Bio-inspired Vision-based Flying Robots (Completed)

Applying bio-inspired methods to indoor flying robots for autonomous vision-based navigation.

*** A follow-up project can be found at this address. ***

Robotic vision raises the question of how to use, efficiently and in real time, the large amount of information gathered through the receptors. The mainstream approach to computer vision, based on a sequence of pre-processing, segmentation, object extraction, and pattern recognition of each single image, is not viable for behavioral systems that must respond very quickly in their environments. Behavioral and energetic autonomy will benefit from lightweight vision systems tuned to simple features of the environment.

In this project, we explore an approach whereby robust vision-based behaviors emerge from the coordination of several visuo-motor components that directly link simple visual features to motor commands. Biological inspiration is taken from insect vision, and evolutionary algorithms are used to evolve efficient neural networks. The resulting controllers select, develop, and exploit visuo-motor components tailored to the information relevant for the particular environment, robot morphology, and behavior.
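To make the idea of evolving a direct visuo-motor link concrete, here is a minimal sketch that evolves a controller mapping the pixels of a linear camera straight to two motor commands. Everything in it is an assumption for illustration: the 8-pixel input, the single-layer tanh network, the truncation-selection genetic algorithm with Gaussian mutation, and the toy fitness that rewards turning away from the dark half of the image (taken here to be a nearby wall). It is not the project's evolutionary software (goevo), nor the spiking networks used in the experiments.

```python
import math
import random

N_PIXELS = 8   # hypothetical: photoreceptors sampled from a linear camera (index 0 = leftmost)
N_MOTORS = 2   # hypothetical: left and right motor commands

def controller(genome, pixels):
    """Single-layer network: each motor is tanh(bias + weighted sum of pixel intensities)."""
    per_motor = N_PIXELS + 1
    outputs = []
    for m in range(N_MOTORS):
        weights = genome[m * per_motor:(m + 1) * per_motor]
        # zip stops after N_PIXELS terms; the last weight is the bias
        activation = weights[-1] + sum(w * p for w, p in zip(weights, pixels))
        outputs.append(math.tanh(activation))
    return outputs

def toy_fitness(genome):
    """Toy task: turn away from the dark half of the image (assumed to be a close wall)."""
    wall_left = [0.0] * 4 + [1.0] * 4    # left half dark
    wall_right = [1.0] * 4 + [0.0] * 4   # right half dark
    left1, right1 = controller(genome, wall_left)    # should turn right: left motor faster
    left2, right2 = controller(genome, wall_right)   # should turn left: right motor faster
    return (left1 - right1) + (right2 - left2)

def evolve(fitness, pop_size=30, generations=40, n_elite=5, sigma=0.2, seed=0):
    """Truncation selection with elitism: the best genomes survive, the rest are mutated copies."""
    rng = random.Random(seed)
    n_genes = N_MOTORS * (N_PIXELS + 1)
    population = [[rng.uniform(-1.0, 1.0) for _ in range(n_genes)] for _ in range(pop_size)]
    for _ in range(generations):
        elite = sorted(population, key=fitness, reverse=True)[:n_elite]
        offspring = [[g + rng.gauss(0.0, sigma) for g in rng.choice(elite)]
                     for _ in range(pop_size - n_elite)]
        population = elite + offspring
    return max(population, key=fitness)

best = evolve(toy_fitness)
print(round(toy_fitness(best), 2))
```

Because the elite are copied unchanged into each new generation, the best fitness never decreases; the maximum possible score of this toy task is 4.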


Khepera in its arena with randomly arranged black-and-white stripes


Evolving Neural Networks for Vision-based Navigation

The story started with a non-flying robot. Floreano et al. (2001) demonstrated that an evolved spiking neural network could control a Khepera for smooth vision-based wandering in an arena with randomly sized black-and-white patterns on the walls. The best individuals moved forward and avoided walls very reliably. However, the dynamics of this terrestrial robot are much simpler than those of flying devices, and we are currently exploring whether the approach can be extended to flying robots.
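The exact spiking neuron model used by Floreano et al. (2001) is not described on this page. As a stand-in, the sketch below uses the textbook leaky integrate-and-fire neuron: the membrane potential integrates its input, leaks back toward rest with time constant `tau`, and emits a spike and resets when it crosses `threshold`. All parameter values are hypothetical. The point it illustrates is rate coding: a stronger input (for example, higher contrast on a photoreceptor) produces more frequent spikes.

```python
def lif_spike_train(input_current, dt=1.0, tau=10.0, threshold=1.0, v_reset=0.0):
    """Leaky integrate-and-fire neuron; returns the time steps at which it spikes."""
    v = v_reset
    spikes = []
    for t, i_in in enumerate(input_current):
        v += dt * (-v / tau + i_in)   # explicit Euler step: leak toward 0 plus input drive
        if v >= threshold:
            spikes.append(t)
            v = v_reset               # reset the membrane potential after a spike
    return spikes

weak = lif_spike_train([0.2] * 100)
strong = lif_spike_train([0.5] * 100)
print(len(weak), len(strong))
```

With a constant input below `threshold / tau`, the potential settles below threshold and the neuron never fires.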

The Khepera equipped with one of the best evolved neural networks has been running in an exhibition at the Villa-Reuge for 8 months.

Video Clips

- Autonomous individual (equipped with a kevopic board and an embedded neural network) that evolved to move backward whenever a frontal obstacle is too close (top view: MPG, 12MB; close view: MPG, 6MB; 25.02.04)
- One of the best individuals (AVI, 7.3MB, 05.2002)


Evolution Applied to Physical Flying Robots: the Blimp

Evolving aerial robots brings a new set of challenges. Compared with a wheeled robot, the major issues in developing (e.g., evolving with goevo) a control system for an airship are (1) the extension to three dimensions, (2) the impossibility of communicating with a computer via cables, (3) the difficulty of defining and measuring performance, and (4) the more complex dynamics. For example, while the Khepera is controlled in speed, the blimp is controlled in thrust (which acts on the derivative of speed) and can slip sideways. Moreover, inertial and aerodynamic forces play a major role. Artificial evolution is a promising method for automatically developing control systems for complex robots, but it requires machines that can move for long periods of time without human intervention and withstand shocks.
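The difference between speed control and thrust control can be illustrated with a one-dimensional model. This is an assumption for illustration, not the project's blimp model: for a speed-controlled robot the commanded speed takes effect immediately, whereas for a thrust-controlled body the command is a force and the speed follows m*dv/dt = F - b*v. The mass and drag values are hypothetical.

```python
def surge_velocity(thrusts, dt=0.1, mass=0.1, drag=0.05):
    """Integrate m*dv/dt = F - b*v with explicit Euler; return the velocity after each step.

    thrusts: forward forces in newtons (hypothetical values); mass in kg;
    drag: linear drag coefficient b in kg/s.
    """
    v = 0.0
    trace = []
    for force in thrusts:
        v += dt * (force - drag * v) / mass
        trace.append(v)
    return trace

# 20 s of constant 0.01 N thrust, then 2 s with the motor off.
trace = surge_velocity([0.01] * 200 + [0.0] * 20)
print(round(trace[0], 3), round(trace[199], 3), round(trace[-1], 3))
```

The speed lags behind the command with time constant m/b (2 s with these values), settles near F/b (0.2 m/s), and keeps coasting after the thrust stops, whereas a wheel-speed command would take effect at once.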

Those requirements led us to develop the Blimp 2 shown in the pictures. All onboard electronic components are connected to a microcontroller with a wireless link to a desktop computer. The bidirectional digital communication with the desktop computer is handled by a Bluetooth radio module with a range of more than 15 m. Energy is provided by a Li-Poly battery, which lasts more than 3 hours under normal operation during evolutionary runs with goevo.

For now, a simple linear camera is attached to the front of the gondola, pointing forward. We are currently working on other kinds of micro-cameras. The other embedded sensors are an anemometer for fitness evaluation, a MEMS gyro for estimating the yaw rotation speed, and a distance sensor for altitude measurement.

Video Clips

- Blimp 2b (evolved in simulation and tested in reality)
  - Autonomous run of the best individual evolved in simulation (41st generation), tested in reality (MPG, 7MB)
  - The same individual is able to cope with obstacles it never saw during evolution (MPG, 6.6MB; MPG, 6.8MB)
  - This individual is also able to move backward whenever facing an obstacle (MPG, 3MB; MPG, 9MB)
- Blimp 2b (slightly larger envelope, addition of a yaw-axis MEMS gyro)
  - Autonomous run of the best individual of the 24th generation (MPG, 3.3MB, 09.2003)
  - Same individual demonstrating its ability to get out of stuck situations (MPG, 2.4MB, 09.2003)
- Blimp 2 (second version, fully rearranged architecture)
  - Randomly selected individual of the first generation (MPG, 1.6MB, 04.2003)
  - Autonomous run of the best individual of the 20th generation (MPG, 13MB, 04.2003)
  - What the blimp "sees" (AVI, 0.7MB, 04.2003)
- Blimp 1 (first version)
  - Human-piloted tour in a large environment (AVI, 3.7MB, 05.2002)
  - Human-piloted tour (AVI, 7.5MB, 03.2002)
  - What the blimp "sees" with its horizontal camera while moving through its environment (AVI, 400kB)


Blimp 2 (100g)

Blimp 2b in its environment


Model F2 (2004, 30g, 1.1m/s)

Non-robotic models by DIDEL:

Celine (2003, 10g, 1m/s)

miniCeline (2004, 6g, 0.86m/s, not shown)

Model C4 (2001, 50g, 1.4m/s)


Final Goal: an Autonomous Vision-based Indoor Plane

To further demonstrate this concept, we chose indoor slow flyers as a well-suited test-bed because they require very fast reactions, low power consumption, and extremely lightweight equipment. Flying indoors simplifies the experiments by avoiding the effects of wind and the dependence on the weather, and it allows the visual environment to be modified as needed. Our new model F2 (picture on the left) has the following characteristics: bidirectional digital communication using Bluetooth, an overall weight of 30 g, an 80 cm wingspan, more than 20 minutes of autonomy, a minimum flight speed of 1.1 m/s, a minimum flying space of about 7x7 meters, 2 or 3 linear cameras, 1 gyro, and 1 two-axis accelerometer.

Compared with the blimp, this kind of airplane is slightly faster and has 2 more degrees of freedom (pitch and roll). Moreover, such airplanes cannot be evolved in a room. We are therefore currently working on a robotic flight simulator (see below). Both physical and simulated indoor slow flyers are compatible with goevo.

**Initial experiments using optic flow without evolutionary methods have been carried out to demonstrate vision-based obstacle avoidance with a 30-gram airplane flying at about 2 m/s (model F2, see pictures on the left). The experimental environment is a 16x16 m arena equipped with textured walls.

The behavior of the plane is inspired by that of flies (see Tammero and Dickinson, The Journal of Experimental Biology 205, pp. 327-343, 2002). The ultra-light aircraft flies mainly in straight lines while using gyroscopic information to counteract small perturbations and keep its heading. Whenever the frontal optic-flow expansion exceeds a fixed threshold, it engages a saccade (a quick turning action), which consists of a predefined series of motor commands that turn the plane quickly away from the obstacle (see video below). The direction of the saccade (left or right) is chosen so as to turn away from the side experiencing higher optic flow (corresponding to closer objects).
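The steering strategy described above can be summarized as a small state machine. This is a sketch under stated assumptions, not the F2 firmware: the optic-flow inputs are non-negative magnitudes for the left and right sides (their sum stands in for frontal expansion), positive commands mean turning right, the saccade ignores sensor input until it completes, and the threshold, gyro gain, and saccade profile are hypothetical values.

```python
from dataclasses import dataclass, field

# Hypothetical saccade profile: (yaw command, number of control steps); positive = turn right.
SACCADE_PROFILE = [(1.0, 5), (0.5, 3)]

@dataclass
class SaccadeController:
    expansion_threshold: float = 0.5   # hypothetical threshold on frontal optic-flow expansion
    k_gyro: float = 0.8                # hypothetical proportional gain on the gyro's yaw rate
    pending: list = field(default_factory=list)  # remaining commands of an ongoing saccade

    def step(self, flow_left, flow_right, yaw_rate):
        """Return a yaw command in [-1, 1] for one control cycle."""
        if self.pending:                      # a saccade is open-loop: finish it first
            return self.pending.pop(0)
        if flow_left + flow_right > self.expansion_threshold:
            # Turn away from the side with more optic flow (the closer obstacle).
            direction = 1.0 if flow_left > flow_right else -1.0
            self.pending = [direction * cmd for cmd, n in SACCADE_PROFILE for _ in range(n)]
            return self.pending.pop(0)
        # Straight flight: counteract small perturbations measured by the gyro.
        return max(-1.0, min(1.0, -self.k_gyro * yaw_rate))

ctrl = SaccadeController()
print(ctrl.step(0.1, 0.1, 0.25))   # straight flight with a small gyro correction
print(ctrl.step(0.4, 0.2, 0.0))    # more flow on the left: a saccade to the right starts
```

Keeping the saccade as a fixed command sequence makes the turn fast and predictable; between saccades, only the gyro loop is active.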

Two horizontal linear cameras are mounted on the leading edge of the wing to feed the optic-flow estimation algorithm running on the embedded 8-bit microcontroller. Heading control, including obstacle avoidance, is thus truly autonomous, while an operator controls only the altitude (pitch) of the airplane via a joystick and a Bluetooth communication link.
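The page does not name the optic-flow algorithm running on the microcontroller. One classical choice for 1D images is Srinivasan's image interpolation algorithm (I2A), sketched below in floating point as an illustration; an 8-bit implementation would use integer arithmetic instead. It models the new frame as the previous frame plus a fraction of the difference between copies of the previous frame shifted by -k and +k pixels, and solves for the shift s by least squares: with D(x) = I0(x-k) - I0(x+k), s = 2k * sum((I - I0) * D) / sum(D^2).

```python
import math

def i2a_shift(prev, curr, k=1):
    """Image interpolation algorithm: estimate the shift (in pixels) from prev to curr.

    A positive result means the pattern moved toward higher pixel indices.
    """
    num = 0.0
    den = 0.0
    for x in range(k, len(prev) - k):
        d = prev[x - k] - prev[x + k]       # reference shifted by -k minus reference shifted by +k
        num += (curr[x] - prev[x]) * d
        den += d * d
    return 2.0 * k * num / den if den > 0.0 else 0.0

# Synthetic test: a sinusoidal pattern (period 20 px) moved by half a pixel.
prev = [math.sin(2 * math.pi * x / 20) for x in range(42)]
curr = [math.sin(2 * math.pi * (x - 0.5) / 20) for x in range(42)]
print(round(i2a_shift(prev, curr), 3))
```

The small deviation from 0.5 comes from approximating the image gradient with a finite difference. Applied to the left and right cameras, estimates of this kind give per-side optic-flow magnitudes that can be compared to choose the saccade direction.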

So far, the 30-gram robot has been able to fly collision-free for more than 4 minutes without any intervention on its heading. Only 20% of the time was spent in saccades, which indicates that the plane flew straight except when very close to the walls. During those 4 minutes, the aerial robot generated 50 saccades and covered about 300 m in straight motion.
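These figures imply some average values. The short calculation below assumes the flight lasted exactly 4 minutes (the text says "more than"), so the results are approximate.

```python
flight_time_s = 4 * 60        # assumption: exactly 4 minutes
saccade_fraction = 0.20       # share of flight time spent in saccades
n_saccades = 50
straight_distance_m = 300.0   # "about 300 m" covered in straight motion

straight_time_s = flight_time_s * (1 - saccade_fraction)
saccade_time_s = flight_time_s * saccade_fraction

mean_straight_speed = straight_distance_m / straight_time_s   # m/s while flying straight
mean_saccade_duration = saccade_time_s / n_saccades           # s per saccade
mean_straight_segment = straight_time_s / n_saccades          # s of straight flight per saccade

print(f"{mean_straight_speed:.2f} m/s, {mean_saccade_duration:.2f} s, {mean_straight_segment:.2f} s")
```

Under this assumption, the plane averaged about 1.6 m/s in straight flight, each saccade lasted roughly 1 s, and it flew straight for just under 4 s between saccades.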

See this poster for more information.

Video Clips

- Model F2 (30g, min. 1.1m/s, Bluetooth, 2-3 linear cameras, 1 gyro, 1 two-axis accelerometer)
  - Autonomous steering experiment** (MPG, 26MB, 16.07.2004)
  - Model F2 presentation (MPG, 19MB, 16.07.2004)
- Model "celine" (10g, 1m/s, IR-controlled, no sensors)
  - Remote-controlled flight of the 10g "celine" model in a 7x4 m hotel room (MPG, 8MB, 10.2003)
  - Other great video clips of the "celine" can be downloaded from this page
- Model C4 and previous (50g, 1.4m/s, radio-controlled, no sensors)
  - Full-length video clip of this model C4 and the previous one (B) in flight (MPG, 38.7MB, 2001)
  - Some extracts from the above video clip: Model C4, part I (AVI, 2.5MB, 2001), part II (AVI, 2.5MB, 2001), part III, with a person walking behind the flying aircraft (AVI, 4.2MB, 2001)
  - Full-length video of the short television report on the TV news (MPG, 27.7MB, 2001)


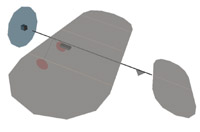

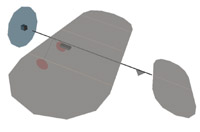

Robotic Flight Simulator

A flight simulator based on Webots4 helps us speed up evolutionary runs (up to 10x faster) and rapidly test new ideas. Using OpenGL and ODE (Open Dynamics Engine), Webots4 can accurately simulate 3D motion with physical effects such as gravity, inertia, shocks, and friction. Our blimp dynamic model includes buoyancy, drag, Coriolis, and added-mass effects (cf. the official Webots distribution for a simplified example of this model). So far, we have been able to demonstrate very good behavioral correspondence between the simulated Blimp 2b and its physical counterpart (see movies below) when evolved with goevo.
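To illustrate the kind of coupling such a model captures, here is a simplified horizontal-plane (surge, sway, yaw) sketch in the 3-DOF form commonly used for ships and airships, with added mass folded into the diagonal mass terms and linear drag. It omits buoyancy and the vertical axis, all parameter values are hypothetical, and it is not the model used in Webots or in this project. The Coriolis terms show why a turning blimp slips sideways: yaw rate combined with forward speed drives a sway velocity.

```python
from dataclasses import dataclass

@dataclass
class PlanarBlimp:
    # Hypothetical parameters: rigid-body mass/inertia plus added mass, and linear drag.
    m11: float = 0.15   # surge: mass + added mass (kg)
    m22: float = 0.25   # sway: larger added mass for sideways motion of an elongated hull (kg)
    m33: float = 0.01   # yaw: inertia + added inertia (kg m^2)
    d11: float = 0.05   # surge drag (kg/s)
    d22: float = 0.20   # sway drag (kg/s)
    d33: float = 0.01   # yaw drag (kg m^2/s)
    u: float = 0.0      # surge velocity (m/s)
    v: float = 0.0      # sway velocity (m/s)
    r: float = 0.0      # yaw rate (rad/s)

    def step(self, thrust, torque, dt=0.01):
        """Explicit Euler step of 3-DOF planar dynamics with Coriolis/added-mass coupling."""
        du = (thrust + self.m22 * self.v * self.r - self.d11 * self.u) / self.m11
        dv = (-self.m11 * self.u * self.r - self.d22 * self.v) / self.m22
        dr = (torque - (self.m22 - self.m11) * self.u * self.v - self.d33 * self.r) / self.m33
        # All derivatives are computed from the old state before updating it.
        self.u += dt * du
        self.v += dt * dv
        self.r += dt * dr

blimp = PlanarBlimp()
for _ in range(500):          # 5 s of straight thrust: no yaw, so no sideslip
    blimp.step(thrust=0.01, torque=0.0)
for _ in range(200):          # 2 s of thrust plus yaw torque: the blimp slips sideways
    blimp.step(thrust=0.01, torque=0.001)
print(round(blimp.u, 3), round(blimp.v, 4), round(blimp.r, 3))
```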

At the time of writing, a simple model of our indoor slow flyer is under development. Our plan is to have evolution take place in simulation and to use the best evolved controllers to form a small population that will be incrementally evolved on the physical airplane, with human assistance in case of imminent collision. However, we anticipate that evolved neural controllers will not transfer very well, because the difference between a simulated flyer and a physical one is likely to be quite large. This issue will probably be addressed by evolving Hebbian-like synaptic plasticity, which we have shown to support fast self-adaptation to changing environments (cf. Urzelai and Floreano, 2000).
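Hebbian-like plasticity means that synaptic weights keep changing during the robot's lifetime as a function of pre- and postsynaptic activity, so that evolution can shape how weights adapt rather than fixing their values. The rule below is a minimal illustrative example (an assumption, not necessarily one of the rules used by Urzelai and Floreano): the weight moves toward the postsynaptic activity, but only while the presynaptic neuron is active, so it can both strengthen and weaken as conditions change.

```python
def gated_hebbian_update(w, pre, post, eta=0.2):
    """Move weight w toward postsynaptic activity `post`, gated by presynaptic activity `pre`.

    With w, pre, post in [0, 1] and 0 < eta <= 1, the new weight is a convex combination
    of w and post, so it stays in [0, 1].
    """
    return w + eta * pre * (post - w)

w = 0.0
for _ in range(30):                # correlated activity: the synapse strengthens
    w = gated_hebbian_update(w, pre=1.0, post=1.0)
strong = w
for _ in range(30):                # the situation changes: pre active, post silent
    w = gated_hebbian_update(w, pre=1.0, post=0.0)
print(round(strong, 3), round(w, 3))
```

When the presynaptic neuron is silent the weight does not change, so a synapse only adapts while it is actually in use.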

Video Clips

- Blimp 2b in simulation
  - Autonomous run of one of the best evolved individuals. Fitness is forward speed only. (MPG, 5.6MB, 21.12.2003)
  - Autonomous run of one of the best evolved individuals. Fitness is forward speed, counted only when far from walls. (MPG, 2.9MB, 21.12.2003)
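The two fitness criteria named in these clips could be written down as follows. This is a sketch under assumptions: speeds are per-time-step forward velocities (from the simulator, or from an onboard anemometer on the real blimp), wall distances are available from the simulator, and the function names and the 0.5 m margin are hypothetical.

```python
def fitness_forward_speed(speeds):
    """Average forward speed over a trial (backward motion lowers the score)."""
    return sum(speeds) / len(speeds) if speeds else 0.0

def fitness_speed_far_from_walls(speeds, wall_distances, margin=0.5):
    """Average forward speed, counting only steps where the blimp is farther than `margin` from walls."""
    if not speeds:
        return 0.0
    counted = sum(s for s, d in zip(speeds, wall_distances) if d > margin)
    return counted / len(speeds)

speeds = [0.2, 0.2, 0.2, 0.2]
distances = [2.0, 1.0, 0.3, 0.1]   # the last two steps are close to a wall
print(fitness_forward_speed(speeds), fitness_speed_far_from_walls(speeds, distances))
```

The second variant rewards speed only in open space, so an individual cannot score by pressing along a wall.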


Blimp 2b in Webots

C4 in Webots


People Involved in this Project

- Prof. Dario Floreano: head of the lab and main advisor.
- DR. CNRS Franceschini, Prof. Siegwart and Prof. Nicoud are members of the thesis committee.
- Cyril Halter has been strongly involved in the development of the indoor slow flyers, especially the aerodynamics and the building of the prototypes.
- Dr. Jörg Kramer and Dr. Shih-Chii Liu (INI) for the design of aVLSI vision chips.
- André Guignard helped with several micro-mechanical realizations.
- George Vaucher (ACORT) for some PCB design and delicate BGA chip soldering.

Visiting Students

Diploma Projects

- Alexis Guanella: Flight Simulator for Robotic Indoor Slow Flyers (winter 2003-04)
- Emanuele Dati: New Sensors for Evolutionary Blimp 2 (winter 2003-04)
- Antoine Beyeler: redesign of the blimp mainboard (winter 2002-03)
- Tancredi Merenda: realization of the first blimp setup (winter 2001-02)

Semester Projects

- Yannick Fournier: miniature CMOS cameras for indoor slow flyer (summer 2003)
- Alexis Guanella: flying robot simulator (summer 2003)
- Yves Linder: miniature 2D cameras for indoor slow flyer (winter 2002-03)
- Michael Bonani: development of a Bluetooth radio module for the indoor slow flyer (summer 2002)
- Alexandre Goy: design and realization of a biomimetic vision system (summer 2002)


More recent event announcements can be found on the follow-up project webpage.

[19 March - 19 June 2005] Exhibition of our artificially evolved Blimp at Musée Jenisch in Vevey, Switzerland.

[14 February 2005] Prof. Srinivasan gives a talk at EPFL about honeybee vision, navigation, "cognition" and applications to autonomous vehicles.

[October 2004] We are a technical partner for an art exhibition with blimps by knowbotic research.

[18 June 2004] Seminar by DR. Franceschini about insect-inspired control of micro aircraft.

[28 May 2004] The first Flying Robots Contest at EPFL is based on blimp platforms and will take place within the Smartrob contest.

[2 February 2004] Seminar about indoor flying robots at Robopoly.

[17 December 2003] The first Flying Robots Contest at EPFL has officially started with 5 teams of 3-4 people. Each team received a subset of the equipment we developed for the Blimp 2.

[16 October 2003] Talk about this project, "Bio-inspired Flying Robots", followed by demonstrations at the "espace abstract", Rue de Genève 19, Lausanne.

[2-4 May 2003] Open house for EPFL's 150th anniversary: interactive demonstration of the Blimp in the CE corridor, near the salle polyvalente of EPFL.

[11 January 2003] Demonstration at "Robots en ballade" in Pully, Switzerland.

[26 July 2001] Demonstration of our models (B, C and GyroRover) and some of Mr. Szymanski's (blimp, mouse) in the salle polyvalente, EPFL.
