For hundreds of years mankind has been fascinated with machines that display life-like appearance and behaviour. The early robots of the 19th century were anthropomorphic mechanical devices composed of gears and springs that would precisely repeat a pre-determined sequence of movements. Although a dramatic improvement in robotics took place during the 20th century with the development of electronics, computer technology, and artificial sensors, most of today's robots used on factory floors are not significantly different from ancient automatic devices because they are still programmed to precisely execute a pre-defined series of actions. Are these machines intelligent? In my opinion they are not; they simply reflect the intelligence of the engineers who designed and programmed them.

More than ten years ago, it became clear to some of us that the key to creating intelligent robots lies in letting them evolve, self-organize, and adapt to their environment, just as all life forms on Earth have done and keep doing. Evolutionary Robotics became the name used to define the collective effort by engineers, biologists, and cognitive scientists to develop artificial robotic life forms that display the ability to evolve and adapt autonomously to their environment. In this article I will show how we can evolve physical robots and give some examples of the intelligence that these robots develop.

The possibility of evolving intelligent robots through an evolutionary process had already been evoked in 1984 by the neurophysiologist Valentino Braitenberg in his truly inspiring booklet "Vehicles. Experiments in Synthetic Psychology". Braitenberg proposed a thought experiment where one builds a number of simple wheeled robots with different sensors variously connected through electrical wires and other electronic paraphernalia to the motors driving the wheels. When these robots are put on the surface of a table, they will begin to display behaviours such as going straight, approaching light sources, pausing for some time and then rushing away, etc. Of course, some of these robots will fall off the table and will remain on the floor. All one needs to do is continuously pick a robot from the tabletop, build another robot just like it, and add the new robot to the tabletop. If one wants to maintain a constant number of robots on the table, it is necessary to copy-build at least one robot for every robot that falls from the table. During the process of building a copy of the robot, one will inevitably make some small mistakes, such as inverting the polarity of an electrical connection or using a different resistance. Those mistaken copies that are lucky enough to remain longer on the tabletop will have a high number of descendants, whereas those that fall off the table will disappear forever from the population. Furthermore, some of the mistaken copies may display new behaviours and have a higher chance of remaining on the tabletop for a very long time. You will by now realize that the creation of new designs and improvements through a process of selective copying with random errors, without the effort of a conscious designer, was first proposed by Darwin to explain the evolution of biological life on Earth.

However, the dominant view among mainstream engineers that robots were mathematical machines designed and programmed for precise tasks, along with the technology available at that time, delayed the realization of the first experiments in Evolutionary Robotics for almost ten years. In the spring of 1994 our team at EPFL, the Swiss Federal Institute of Technology in Lausanne (Floreano and Mondada, 1994), and a team at the University of Sussex in Brighton (Harvey, Husbands, and Cliff, 1994) reported the first successful cases where robots evolved, without human intervention, various types of neural circuits that allowed them to move autonomously in real environments. The two teams were driven by similar motivations. On the one hand, we felt that a designer approach to robotics was inadequate to cope with the complexity of the interactions between the robot and its physical environment and with the brain circuits required for such interactions. Therefore, we decided to tackle the problem by letting these complex interactions guide the evolutionary development of robot brains subjected to certain selection criteria (technically known as fitness functions), instead of attempting to formalize the interactions and then designing the robot brains. On the other hand, we thought that by letting robots autonomously interact with the environment, evolution would exploit the complexities of the physical interactions to develop much simpler neural circuits than those typically conceived by engineers using formal analysis methods. We had plenty of examples from nature where simple neural circuits were responsible for apparently very complex behaviours. Ultimately, we thought that Evolutionary Robotics would not only discover new forms of autonomous intelligence, but also generate solutions and circuits that could be used by biologists as guiding hypotheses to understand adaptive behaviours and neural circuits found in nature.

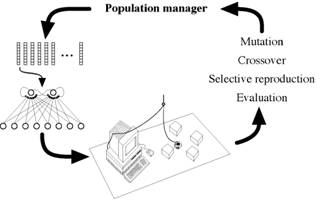

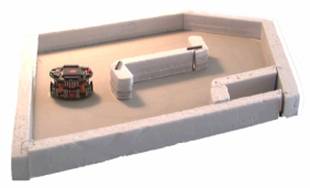

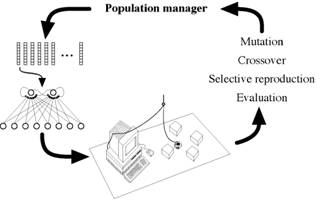

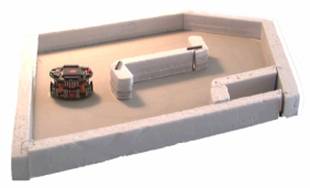

Figure 1. Left: Artificial Evolution of neural circuits for a robot connected to a computer. Right: The miniature mobile robot Khepera in the looping maze used during evolution.

In order to carry out evolutionary experiments without human intervention, at EPFL we developed the miniature mobile robot Khepera (6 cm in diameter and weighing 70 grams) with eight simple light sensors distributed around its circular body (6 on one side and 2 on the other side) and two wheels (figure 1). Given its small size, the robot could be attached to a computer through a cable hanging from the ceiling and specially designed rotating contacts in order to continuously power the robot and let the computer keep a record of all its movements and neural circuit shapes during the evolutionary process, a sort of fossil record for later analysis. The computer generated an initial population of random artificial chromosomes composed of 0's and 1's that represented the properties of an artificial neural network. Each chromosome was then decoded, one at a time, into the corresponding neural network whose input neurons were attached to the sensors of the robot and whose output unit activations were used to set the speeds of the wheels. The decoded neural circuit was tested on the robot for some minutes while the computer evaluated its performance (fitness). In these experiments, we wished to evolve the ability to move straight and avoid obstacles. Therefore, we instructed the computer to select for reproduction those individuals whose wheels moved in a similar direction (straight motion) and whose sensors had lower activation (far from obstacles). Once all the chromosomes of the population had been tested on the robot, the chromosomes of selected individuals were organized in pairs and parts of their genes were exchanged with small random errors in order to generate a number of offspring. These offspring formed a new generation that was again tested and reproduced, and the cycle was repeated for many generations. After 50 generations (corresponding to approximately two days of continuous operation), we found a robot capable of performing complete laps around the maze without ever hitting obstacles. 
The evolved circuit was rather simple, but still more complex than hand-designed circuits for similar behaviours because it exploited non-linear feedback connections among motor neurons in order to get away from some corners. Furthermore, the robot always moved in the direction corresponding to the higher number of sensors. Although the robot is perfectly circular and could move in both directions in the early generations, those individuals moving in the direction with fewer sensors tended to remain stuck in some corners because they could not perceive them properly, and thus disappeared from the population. This represented a first case of adaptation of neural circuits to the body shape of the robot in a specific environment.
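The evolutionary cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not the code used at EPFL: the genome layout (4 bits per weight, a single layer from 8 sensors to 2 motors, no feedback connections), the truncation selection, the mutation rate, and the exact form of `step_fitness` are all assumptions chosen for brevity.

```python
import random

# All sizes and rates are illustrative assumptions, not values from the experiments.
N_SENSORS = 8
N_MOTORS = 2
BITS_PER_WEIGHT = 4
GENOME_LEN = N_SENSORS * N_MOTORS * BITS_PER_WEIGHT


def random_chromosome(rng):
    """A random string of 0's and 1's."""
    return [rng.randint(0, 1) for _ in range(GENOME_LEN)]


def decode(chromosome):
    """Turn the bit string into a sensor-to-motor weight matrix with values in [-1, 1]."""
    max_int = 2 ** BITS_PER_WEIGHT - 1
    weights = []
    for m in range(N_MOTORS):
        row = []
        for s in range(N_SENSORS):
            start = (m * N_SENSORS + s) * BITS_PER_WEIGHT
            bits = chromosome[start:start + BITS_PER_WEIGHT]
            value = int("".join(map(str, bits)), 2)
            row.append(2.0 * value / max_int - 1.0)
        weights.append(row)
    return weights


def step_fitness(left_speed, right_speed, max_sensor):
    """Reward fast, straight motion with low sensor activation (assumed formula).

    Wheel speeds are in [-1, 1]; max_sensor is the highest sensor reading in [0, 1].
    """
    straightness = 1.0 - abs(left_speed - right_speed) / 2.0
    speed = (abs(left_speed) + abs(right_speed)) / 2.0
    return speed * straightness * (1.0 - max_sensor)


def crossover(a, b, rng):
    """Exchange the tails of two chromosomes at a random cut point."""
    point = rng.randint(1, len(a) - 1)
    return a[:point] + b[point:], b[:point] + a[point:]


def mutate(chromosome, rate, rng):
    """Flip each bit with a small probability (the 'small random errors')."""
    return [1 - bit if rng.random() < rate else bit for bit in chromosome]


def next_generation(population, fitnesses, rng, n_parents=4, mutation_rate=0.02):
    """Keep the best individuals as parents and breed a full new population."""
    ranked = sorted(zip(fitnesses, population), key=lambda pair: pair[0], reverse=True)
    parents = [chromosome for _, chromosome in ranked[:n_parents]]
    offspring = []
    while len(offspring) < len(population):
        mother, father = rng.sample(parents, 2)
        for child in crossover(mother, father, rng):
            offspring.append(mutate(child, mutation_rate, rng))
    return offspring[:len(population)]
```

In a real run, each decoded network would drive the robot for a few minutes while `step_fitness` is summed over time; here the fitness values passed to `next_generation` are simply whatever that evaluation returns.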

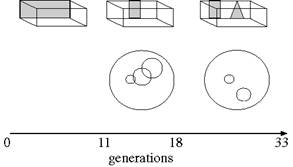

Figure 2. Left: The gantry robot developed at Sussex University is a suspended camera that can move on the plane and rotate. Right: Environments and evolved receptive fields across generations. The wide circle shows the entire visual field and the small circles represent the groups of pixels that are actually used by the evolved individuals.

The Sussex team instead developed a gantry robot consisting of a suspended camera that could move in a small box along the x and y coordinates and also rotate about its own axis. The image from the camera was fed into a computer and some of its pixels were used as inputs to an evolutionary neural circuit whose output was used to move the camera. The artificial chromosomes encoded both the architecture of the neural network and the size and position of the pixel groups used as input to the network. The team used a form of incremental evolution whereby the gantry robot was first evolved in a box with one painted wall and asked to go towards the wall. Then, the size of the painted area was reduced to a rectangle and the robot was incrementally evolved to go towards the rectangle. Finally, a triangle was put near the rectangle and the robot was asked to go towards the rectangle, but avoid the triangle. A remarkable result of these experiments was that evolved individuals used only two groups of pixels to recognize the shapes by moving the camera from right to left and using the time of pixel activation as an indicator of the shape being faced (for the triangle, both groups of pixels become active at the same time, whereas for the rectangle the top group of pixels becomes active before the lower group). This was compelling evidence that evolution could exploit the interaction between the robot and its environment to develop smart, simple mechanisms that could solve apparently complex tasks.

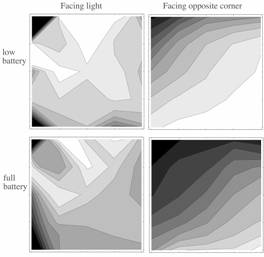

Figure 3. Left: A Khepera robot is positioned in an arena with a simulated battery charger (the black-painted area on the floor). The only illumination in the environment is provided by the light tower above the recharging station. Right: Activity levels of one neuron of the evolved individual. Each box shows the activity of the neuron (white = very active, black = inactive) while the robot moves in the arena (the recharging area is in the top left corner). The activity of the neuron reflects the orientation of the robot and its position in the environment, but is not affected by the level of battery charge.

The next question was whether more complex cognitive skills could be evolved by simply exposing robots to more challenging environments. To test this hypothesis, at EPFL we put the Khepera robot in an arena with a battery charger in one corner under a light source and let the robot move around until its batteries were discharged (Floreano and Mondada, 1996). To accelerate the evolutionary process, the batteries were simulated and lasted only 20 seconds; the battery charger was a black-painted area of the arena and when the robot happened to pass over it, the batteries were immediately recharged. The fitness criterion was the same as that used for the experiment on evolution of straight navigation (figure 1), that is, keep moving as much as possible while staying away from obstacles. Those robots that managed to find the battery charger (initially by chance) could live longer and thus accumulate more fitness points. After 240 generations, that is 1 week of continuous operation, we found a robot that was capable of moving around the arena, going towards the charging station only 2 seconds before the battery was fully discharged, and then immediately returning to the open arena. The robot did not simply sit on the charging area because that area was too close to the walls, and staying there was penalized by the fitness criterion. When we analysed the activity of the evolved neural circuit while the robot was freely moving in the arena, we discovered that the activation of one neuron depended on the position and orientation of the robot in the environment, but not on the level of battery charge. In other words, this neuron encoded a spatial representation of the environment (sometimes referred to as a "cognitive map" by psychologists), similar to some neurons that neurophysiologists discovered in the brain of rats exploring an environment.
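The way a longer life translates into more fitness points can be made concrete with a small sketch. It is only an illustration under stated assumptions: one time step stands for one second, `battery_steps` and `max_steps` are hypothetical parameters, and the per-step scores would come from the navigation criterion evaluated on the robot.

```python
def lifetime_fitness(step_scores, on_charger, battery_steps=20, max_steps=60):
    """Accumulate per-step fitness until the simulated battery runs out.

    step_scores: per-step fitness values from the navigation criterion.
    on_charger:  booleans, True when the robot is over the charging area.
    battery_steps and max_steps are illustrative assumptions.
    Returns the total fitness and the number of steps the robot lived.
    """
    charge = battery_steps
    total = 0.0
    steps_lived = 0
    for score, charging in zip(step_scores, on_charger):
        if steps_lived >= max_steps or charge <= 0:
            break
        total += score
        steps_lived += 1
        # Passing over the charger refills the battery at once; otherwise it drains.
        charge = battery_steps if charging else charge - 1
    return total, steps_lived


# A robot that never finds the charger versus one that reaches it at step 18.
scores = [0.5] * 60
never = [False] * 60
once = [i == 18 for i in range(60)]
print(lifetime_fitness(scores, never))
print(lifetime_fitness(scores, once))
```

Because the score is summed over the lifetime, nothing in the fitness function mentions the charger explicitly; finding it is rewarded only indirectly, through the extra steps it buys.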

Figure 4. Co-evolutionary prey (left) and predator (right) robots. Trajectories of the two robots (prey is white, predator is black) after 20, 45, and 70 generations.

Encouraged by these experiments, we decided to make the environment even more challenging by co-evolving two robots in competition with each other. The Sussex team had begun investigating co-evolution of predator and prey agents in simulation to see whether increasingly complex forms of intelligence emerged in the two species, and showed that the evolutionary process changed dramatically when two populations co-evolved in competition with each other because the performance of each robot depends on the performance of the other robot. In the Sussex experiments the fitness of the prey species was proportional to the distance from the predator whereas the fitness of the predator species was inversely proportional to the distance from the prey. Although in some evolutionary runs they observed interesting pursuit-escape behaviours, co-evolution often did not produce interesting results. At EPFL we wanted to use physical robots with different hardware for the two species and give them more freedom to evolve suitable strategies by using as fitness function the time of collision instead of the distance between the two competitors (Floreano, Nolfi, and Mondada, 2001). We created a predator robot with a vision system covering 36 degrees and a prey robot that had only simple sensors capable of detecting an object at a distance of 2 cm, but that could go twice as fast as the predator (figure 4). These robots were co-evolved in a square arena and each pair of predator and prey robots was left free to move for 2 minutes (or less if the predator could catch the prey). The results were quite surprising. After 20 generations, the predators developed the ability to search for the prey and follow it while the prey escaped, moving all around the arena. However, since the prey could go faster than the predator, this strategy did not always pay off for predators. Twenty-five generations later we noticed that predators watched the prey from afar and eventually attacked it, anticipating its trajectory. 
As a consequence, the prey began to move so fast along the walls that predators often missed the prey and crashed into the wall. Again, 25 generations later we discovered that predators had developed a "spider strategy". Instead of attempting to go after the prey, they quietly moved towards a wall and waited there for the prey, which moved so fast that it could not detect the predator early enough to avoid it!
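A fitness function based on the time of collision can be sketched as follows. The linear normalization to the range 0 to 1 and the averaging over several trials are assumptions made for this illustration; the text above only states that the time of collision, rather than the distance between the robots, was used.

```python
def tournament_fitness(capture_times, trial_length):
    """Average predator and prey fitness over several trials.

    capture_times: time of collision in each trial, or None if the prey
                   was never caught before the trial ended.
    trial_length:  maximum duration of a trial, in the same time unit.
    The normalization is an assumption of this sketch.
    """
    prey_scores = []
    for t in capture_times:
        survived = trial_length if t is None else min(t, trial_length)
        prey_scores.append(survived / trial_length)
    prey = sum(prey_scores) / len(prey_scores)
    # The predator gains exactly what the prey loses.
    predator = 1.0 - prey
    return predator, prey


# Three 120-second trials: caught after 30 s, never caught, caught after 60 s.
print(tournament_fitness([30.0, None, 60.0], 120.0))
```

Note that neither score says anything about how the robots should behave: any strategy that shortens or lengthens the time to contact is rewarded, which leaves evolution free to discover tactics such as waiting by the wall.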

However, when we let the two robot species co-evolve for more generations, we realized that they rediscovered older strategies that were effective against the current strategies used by the opponent. This was not surprising. Considering the simplicity of the environment, the number of possible strategies that can be effectively used by the two robot species is limited. Even in nature, there is evidence that co-evolutionary hosts and parasites (for example plants and insects) recycle old strategies over generations. Stefano Nolfi, who worked with us on these experiments, noticed that by making the environment more complex (for example with the addition of objects in the arena) the variety of evolved strategies was much higher and it took much longer before the two species re-used earlier strategies (Nolfi and Floreano, 1998). An increasing number of people are working on co-evolutionary systems these days and I expect this to be a very promising direction for the development of life-like intelligence and the understanding of how biological species have evolved so far or have disappeared during the history of life on Earth.

Another interesting direction in Evolutionary Robotics is the evolution of learning. In a broad sense, learning is the ability to adapt during life and we know that most living organisms with a nervous system display some type of adaptation during life. The ability to adapt quickly is crucial for autonomous robots that operate in dynamic and partially unpredictable environments, but the learning systems developed so far have so many constraints that they are hardly applicable to robots that interact with an environment without human intervention. Of course, evolution is also a form of adaptation, but changes take place only over generations and quite often evolved robots work well only as long as they remain in the environment where they evolved. In a recent set of experiments, we tried to evolve the mechanisms of neural adaptation (Floreano and Urzelai, 2000). The artificial chromosomes encoded a set of rules that were used to change the synaptic connections among the neurons while the robot moved in the environment. The results were very interesting. Not only could we evolve more complex skills, such as the ability to solve sequential tasks that simple insects cannot solve, but the number of generations required was also much smaller. But the most important result was that evolved robots were capable of adapting during their "life" to several types of environmental change that were never seen during the evolutionary process, such as different light conditions, environmental layouts, and even a different robotic body. Very recently, Akio Ishiguro and his team at the University of Nagoya used a similar approach for a simulated humanoid robot and showed that the evolved nervous system was capable of adapting the walking style to different terrain conditions that were never presented during evolution (Fujii et al., 2001). The learning abilities that these evolved robots display are still very simple, but current research is aimed at understanding under which conditions more complex learning skills could evolve in autonomous evolutionary robots.
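The idea of evolving the rules of synaptic change rather than the synaptic strengths themselves can be illustrated with a toy example. Everything specific here is an assumption: a single motor neuron, a plain Hebbian rule with self-limiting growth, and a per-synapse learning rate standing in for what the chromosome would encode. It is not the rule set used in the experiments.

```python
import math
import random


def hebbian_update(weight, pre, post, learning_rate):
    """Plain Hebb rule with self-limiting growth (assumed form).

    For weight, pre, post and learning_rate in [0, 1] the result stays in [0, 1].
    """
    return weight + learning_rate * (1.0 - weight) * pre * post


def run_lifetime(sensor_trace, learning_rates, rng):
    """Start from small random weights and adapt them online during one 'life'.

    learning_rates plays the role of the evolved chromosome: it fixes how each
    synapse changes, not what its strength is.
    """
    weights = [rng.random() * 0.1 for _ in learning_rates]
    for sensors in sensor_trace:
        activation = sum(w * s for w, s in zip(weights, sensors))
        motor = 1.0 / (1.0 + math.exp(-activation))  # sigmoid output neuron
        weights = [
            hebbian_update(w, s, motor, lr)
            for w, s, lr in zip(weights, sensors, learning_rates)
        ]
    return weights


# The first sensor is constantly active, the second is silent.
rng = random.Random(1)
final_weights = run_lifetime([[1.0, 0.0]] * 50, [0.5, 0.5], rng)
print(final_weights)
```

Since the weights are re-initialized at the start of every life, whatever competence the robot shows must be acquired while it moves, which is what allows the same chromosome to cope with conditions it never met during evolution.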

In the experiments described so far, the evolutionary process operated on the features of the software that controlled the robot (in most cases, in the form of an artificial neural network). The distinction between software and hardware is quite arbitrary and in fact one could build a variety of electronic circuits that display interesting behaviours. A few years ago, some researchers realized that the methods used by electronic engineers to build circuits cover only a minor part of all possible circuits that could be built out of a number of components. Furthermore, electronic engineers tend to avoid circuits that display complex, highly non-linear, and hard-to-predict behaviours, which may be just the type of circuits that a behavioural machine requires! Adrian Thompson at the University of Sussex suggested evolving electronic circuits without imposing any design constraints (Thompson, 1998). Thompson used a new type of electronic circuit, known as a Field Programmable Gate Array (FPGA), whose internal wiring can be entirely modified in a few nanoseconds. Since the circuit configuration is a chain of 0's and 1's, he used this chain as the chromosome of the circuit and let it evolve for a variety of tasks, such as sound discrimination and even robot control. The evolved circuits used 100 times fewer components than circuits conceived for similar tasks with conventional electronic design and displayed novel types of wiring. Furthermore, evolved circuits were sensitive to environmental properties, such as temperature, which is usually a drawback in electronic design practice, but is a common feature of all living organisms. The field of Evolutionary Electronics was born and these days several researchers around the world use artificial evolution to discover new types of circuits or let circuits adapt to new operating conditions. 
For example, Adrian Stoica and his colleagues at NASA/JPL are designing evolvable circuits for robotics and space applications (Stoica et al., 2001), while Tetsuya Higuchi and his team at the Electro-Technical Laboratory near Tokyo in Japan are already bringing to the market mobile phones and prosthetic implants with evolvable circuits (Higuchi et al., 1999).

In the early experiments on evolution of navigation and obstacle avoidance (figure 1), the neural circuits adapted over generations to the distribution of sensors of the Khepera robot. However, in nature the body shape and sensory-motor configuration are also subject to an evolutionary process. Therefore, one may imagine a situation where the sensor distribution of the robot adapts to a fixed and relatively simple neural circuit. The team of Rolf Pfeifer at the AI laboratory in Zurich developed Eyebot, a robot with an evolvable eye configuration, to study the interaction between morphology and computation for autonomous robots (Lichtensteiger and Eggenberger, 1999). The vision system of Eyebot is similar to that of flies and is composed of several directional light receptors whose angle can be adjusted by motors. The authors evolved the relative position of the light sensors while using a simple and fixed neural circuit in a situation where the robot was asked to maintain a given distance from an obstacle. The experimental results confirmed the theoretical predictions: the evolved distribution of the light receptors displayed a higher density of receptors toward the front than on the sides. The messages of this experiment are quite important: on the one hand, the body shape plays an important role in the behaviour of an autonomous system and should be co-evolved with other aspects of the robot; on the other hand, computational complexity can be traded for a morphology adapted to the environment and behaviours of the robot.

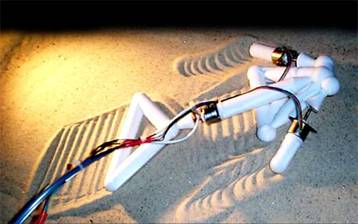

Figure 5. The Golem robot developed by the DEMO lab of Brandeis University. The morphology and neural circuit of this robot have been co-evolved and automatically built by a 3D thermoplastic printer.

The idea of co-evolving the body and the neural circuit of autonomous robots had already been investigated in simulations by Karl Sims (Sims, 1994), but only recently has this been achieved in hardware. Jordan Pollack and his team at Brandeis University have co-evolved the body shape and the neurons controlling the motors of robots composed of variable-length sticks whose fitness criterion is to move forward as far as possible (Lipson and Pollack, 2000). The chromosomes of these robots include specifications for a 3D printer that builds the bodies out of thermoplastic material. These bodies are then fitted with motors and left free to move while their fitness is measured. Artificial evolution generated quite innovative body shapes (see for example figure 5) that resemble biological morphologies such as those of fish.

There are many more interesting results in Evolutionary Robotics, such as the work on the evolution of circuit morphology for legged robots done by the team of Jean-Arcady Meyer in Paris (Meyer et al., to appear), and the field is growing very quickly, but we have clearly seen only the tip of the iceberg. There are a number of challenges ahead. A major challenge is what we call the "bootstrap problem". If the environment or the fitness function is too difficult at the beginning (so that all the individuals of the first generation have zero fitness), evolution cannot select good individuals and make any progress. A possible solution is to start with environments and fitness functions that become increasingly complex over time. However, this means that we must put more effort into developing methods for performing incremental evolution that, to some extent, preserve and capitalize upon previously discovered solutions. In turn, this implies that we should understand what are suitable primitives and genetic encodings upon which artificial evolution can generate more complex structures. Another challenge is hardware technology. Despite the encouraging results obtained in the area of evolvable hardware, many of us feel that we should drastically reconsider the hardware upon which artificial evolution operates (Pfeifer, 2000). This means that maybe we should put more effort into self-assembling materials that impose fewer constraints on the evolving system and thus facilitate the evolutionary process.
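The bootstrap problem and the staged remedy mentioned above can be stated in a few lines. This is only a schematic sketch: the function names, the threshold, and the idea of numbering environments by difficulty are assumptions introduced for illustration.

```python
def bootstrap_stalled(fitnesses):
    """True when every individual scores zero, so selection has nothing to rank."""
    return all(f == 0 for f in fitnesses)


def next_stage(stage, fitnesses, threshold, last_stage):
    """Move to a harder environment once the best individual clears the threshold.

    Stages are numbered from the easiest (0) to the hardest (last_stage).
    The population itself is carried over unchanged, so solutions found in
    the easier stage are the starting point for the harder one.
    """
    if max(fitnesses) >= threshold and stage < last_stage:
        return stage + 1
    return stage


print(bootstrap_stalled([0, 0, 0]))
print(next_stage(0, [0.2, 0.9], threshold=0.8, last_stage=2))
```

The open question raised in the text is hidden in the comment: carrying the population over helps only if the genetic encoding lets the harder task reuse what was evolved for the easier one.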

The experiments conducted at EPFL were possible thanks to the collaboration with Francesco Mondada, Stefano Nolfi, Joseba Urzelai, Jean-Daniel Nicoud, and Andre Guignard. The author is grateful to the Swiss National Science Foundation for continuous support of the project.

Evolutionary Robotics is artificial evolution of control systems for autonomous robots that operate in physical environments. On this page you will find video clips of our evolved mobile robots. The whole evolutionary process is carried out entirely on physical robots without human intervention. The artificial chromosomes of the robots encode properties of their control systems which are artificial neural networks.

The video clips available on this page show some of the best controllers evolved under different environmental conditions, genetic encodings, and robotic platforms. The robots are fully autonomous. The cable that you see going out of the robot sends data to our workstation for data analysis. It is not used for controlling the robot.

Current videos:

Description: The miniature robot Khepera was put in a looping maze. A population of genetically encoded neurocontrollers was randomly initialized and each individual was tested on the robot for some time while its fitness (performance) was measured. The fitness was a simple function saying: keep the wheels rotating and keep sensor activations low. The best individuals were selected, reproduced, mated, and mutated for several generations. The robot evolved alone in its cage without human intervention for several hours. The video shows one of the best individuals, which has autonomously evolved the ability to navigate around the maze, avoiding walls and choosing its own speed and direction of motion.

Authors: Dario Floreano, Francesco Mondada

Literature: Floreano, D. and Mondada, F. Automatic Creation of an Autonomous Agent: Genetic Evolution of a Neural Network Driven Robot. In D. Cliff, P. Husbands, J.-A. Meyer, and S. Wilson (Eds.), From Animals to Animats III, Cambridge, MA: MIT Press, 1994.

Controller data: Best individual of generation 45, born after 35 hours

View the Quicktime, 1.6Mb

View the Mpeg, 5.2Mb

Description: Using the same procedure described above, the Khepera robot is evolved in a new environment with a simulated battery charger under a light tower. Starting with random chromosomes and an even simpler fitness function than that used above, the robot evolves the ability to explore the environment, locate the battery charger, and periodically go to recharge. Notice that the simulated battery lasts only 20 seconds.

Authors: Dario Floreano, Francesco Mondada

Literature: Floreano, D. and Mondada, F. Evolution of Homing Navigation in a Real Mobile Robot. IEEE Transactions on Systems, Man, and Cybernetics--Part B: Cybernetics, 26(3), 396-407, 1996.

Controller data: Best individual of generation 240, born after 250 hours

View the Quicktime, 1.2Mb

View the Mpeg, 4.8Mb

Description: In this experiment, we explored the possibility of running incremental cross-platform evolution. After 100 generations of evolution on the Khepera, the last population was transferred to the larger robot Koala and incrementally evolved without changing the fitness function. In approximately 20 generations, evolution re-adapted the neurocontrollers to the new robot body and morphology. In the video you see how carefully an incrementally evolved neurocontroller can steer the larger robot around a corner.

Authors: Francesco Mondada, Dario Floreano

Literature: Floreano, D., and Mondada, F. Evolutionary Neurocontrollers for Autonomous Mobile Robots. Neural Networks, 11, 1461-1478, 1998.

Controller data: Best individual of generation 150, born after 120 hours (first 100 hours on the Khepera)

View the Quicktime, 1.7Mb

View the Mpeg, 5.7Mb

Description: Instead of evolving artificial neural networks with fixed synaptic weights, we evolved learning rules and other parameters of the synapses. Every time a neurocontroller is tested on the robot, the synaptic strengths are randomly initialized and change online while the robot moves, according to the evolved learning rules specified in the chromosomes. In the video you will see an evolved neurocontroller that learns in a few seconds to navigate in the environment. Notice how gradually the robot learns not to crash into walls. By the way, the evolved neurocontroller uses fast-changing synapses that keep changing over time even when the robot's behavior is stable and smooth. Read the articles to know more.

Authors: Dario Floreano, Francesco Mondada

Literature: Floreano, D. and Mondada, F. Evolution of Plastic Neurocontrollers for Situated Agents. In P. Maes, M. Mataric, J-A. Meyer, J. Pollack, and S. Wilson (Eds.), From Animals to Animats IV, Cambridge, MA: MIT Press, 1996.

Controller data: Best individual of generation 25, born after 12 hours

View the Quicktime, 2.1Mb

View the Mpeg, 7.1Mb

Description: Two robots, a predator and a prey, are co-evolved within a square arena. The predator has short-range sensors (1 cm) and a vision system, whereas the prey has only short-range sensors but can go twice as fast. Each robot is tested against the best individuals of the previous 5 generations for 40 seconds. The fitness of the predator was inversely proportional to the time needed to catch the prey, whereas the fitness of the prey was proportional to the amount of time it managed to escape the predator. In about 25 generations (a few days of co-evolution on the real robots) we observe good chasing and escaping strategies, such as the one shown in this video sequence. If you observe the two robots for further generations, you can notice a variety of different behaviors. For example, in those generations where the prey moves very fast along the walls, the predator does not attempt to follow it but simply backs up to a wall and waits there for the prey, which travels too fast to avoid it. We can run co-evolutionary experiments thanks to a set of triple rotating contacts.

Authors: Dario Floreano, Stefano Nolfi, Francesco Mondada

Literature: Floreano, D., Nolfi, S., and Mondada, F. Co-Evolution and Ontogenetic Change in Competing Robots. Robotics and Autonomous Systems, To appear, 1999.

View the Mpeg, 6.3Mb

Description: A modular architecture composed of behavioral components with adaptive parameters is evolved in an incremental fashion. Artificial evolution is used to develop the interconnections among the behavioral components and the activation levels of each behavioral component. In addition, components with adaptive parameters can learn on-line using local reinforcement signals. The architecture is evolved incrementally on a series of behavioral tasks. The robot is positioned in a rectangular arena with a light spot at one end. Under the light spot there are metallic contacts for recharging the robot battery (in the video sequence the charging lasts only 5 seconds). During the initial phase a set of behavioral components is evolved to generate wandering, obstacle avoidance, light-following, and homing for charging. In the second phase, several small objects are scattered in the environment, the robot is equipped with a gripper, and the architecture is extended with additional behavioral modules for gripping objects. The system is incrementally evolved for finding objects, picking them up, and releasing them outside a wall, while maintaining the ability to avoid walls and recharge when necessary. The video shows a full sequence of the best individual of the second phase. At the end the robot will go to recharge the batteries. Notice that the robot has only short-range sensors (1 cm) and can see walls and objects only when it is very close to them.

Authors: Joseba Urzelai, Dario Floreano

Literature: Urzelai, J., Floreano, D., Dorigo, M., and Colombetti, M. Incremental Robot Shaping. Connection Science, 10, 341-360, 1998.

View the Mpeg, 5.9Mb

Description: A walking robot was evolved in 3D simulation and built according to the evolved specification. The artificial chromosome encoded the properties of a neural controller and some aspects of body and leg sizes. The fitness function was maximized by a straight trajectory with obstacle avoidance and minimization of energy consumption. The robot could recharge its battery by sitting down. The robot has an array of infrared sensors on the front to detect obstacles. The physical robot weighed about a kilogram and carried microcontrollers, but no batteries. It was connected to the serial port of the workstation. The evolved controller was downloaded and tested on the physical robot. Despite the huge difference between the simulator and the physics of the robot, the controller manages to keep the robot standing and produce a forward movement. This project is still open.

Authors: Jordi Porta, Olivier Michel, Jean-Daniel Nicoud, Dario Floreano

Literature: Not available

View:

an evolved individual in simulation, Quicktime, 1.6 MB

an evolved individual downloaded on the physical robot, Quicktime, 2.8 MB (the date shown on the video is wrong because it was not reset after a battery change: it should be 1998, not 1990!)