The Eyebots

'A new swarm of indoor flying robots capable of operating in synergy with swarms of foot-bots and hand-bots'

08/08/11: Swarmanoid, The Movie receives the AAAI-2011 Best Video Award at the San Francisco annual event!

Introduction:

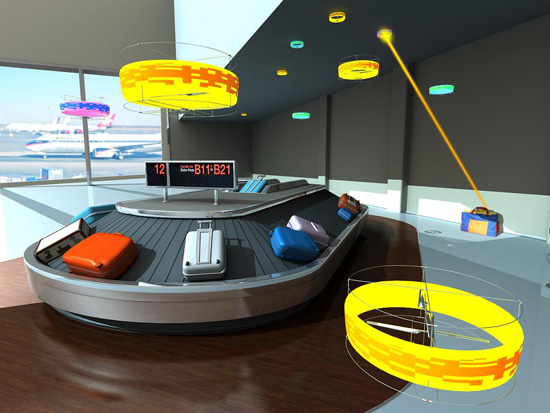

Eyebots are autonomous flying robots with powerful sensing and communication abilities for search, monitoring, and pathfinding in built environments. Eyebots operate in swarm formation, as honeybees do, to efficiently explore built environments, locate predefined targets, and guide other robots or humans (figure 1).

Figure 1: Right - artistic impression of the eye-bots used in an airport, Left - artistic impression of the eye-bots used in an urban house

Swarmanoid Project:

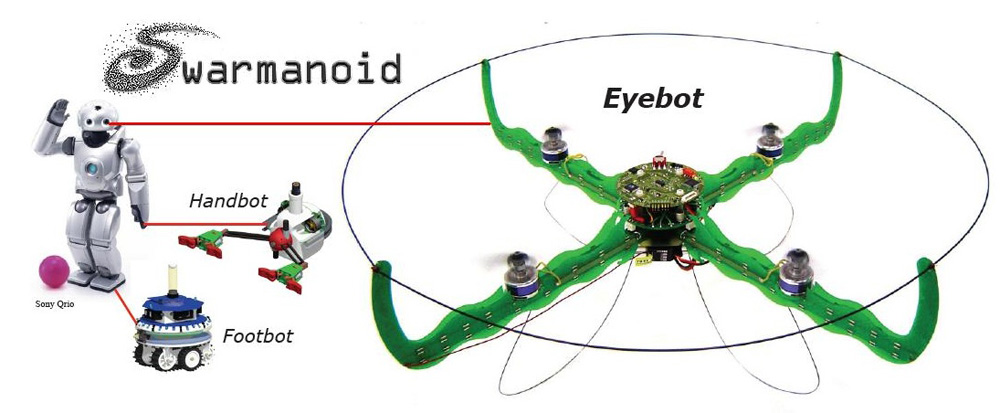

Eyebots are part of the Swarmanoid, a European research project aimed at developing a heterogeneous swarm of wheeled, climbing, and flying robots that can carry out tasks normally assigned to humanoid robots (figure 2). Within the Swarmanoid, Eyebots serve as the eyes of the swarm and guide other robots that have simpler sensing abilities.

Figure 2: "From Humanoid to Swarmanoid". Three types of robots make up the Swarmanoid: the foot-bot (wheeled), the hand-bot (climbing), and the eye-bot (flying)

Eyebots can also be deployed on their own in built environments to locate humans who may need help, suspicious objects, or traces of dangerous chemicals. Their programmability, combined with individual learning and swarm intelligence, makes them rapidly adaptable to many kinds of situations that may pose a danger to humans. Click here to download the eye-bots project flyer.

Hardware Evolution:

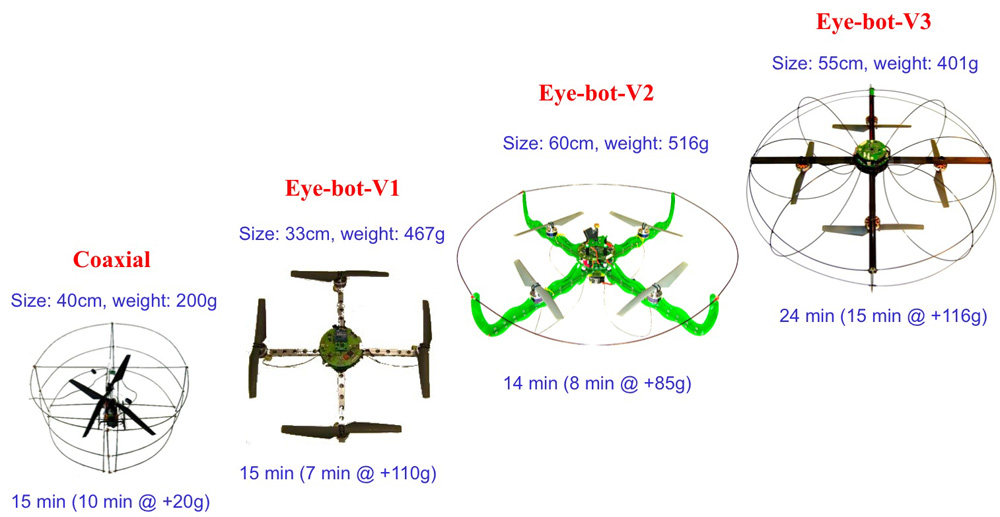

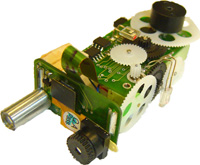

Initial development and testing started with a commercially available coaxial helicopter design. Since then, several eye-bot prototypes have been developed based on a quadrotor design (figure 3).

Figure 3: Prototypes showing the evolution of the eye-bot platform design. The size and weight of each platform are given above it and the flight endurance below it (brackets indicate endurance at the given payload)

You can find more images of the eye-bot in the LIS gallery.

Control System:

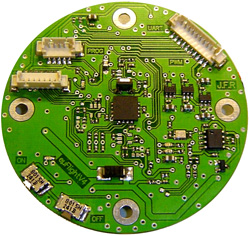

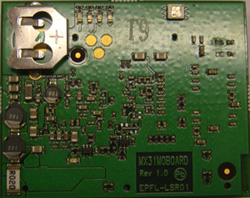

The system incorporates three levels of control: the low-level flight stability controller, the middle-level autonomous controller, and the high-level guidance system (figure 4). The two lower levels are implemented on a single printed circuit board, which also carries 3D inertial sensing and connectors for additional sensors. The guidance system is based on an i.MX31 floating-point processor board, custom developed at the LSRO, running Linux.

Figure 4: Left - current prototype of the flight stability controller and the autonomous controller, Right - Linux-based i.MX31 guidance system
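The three-level split described above can be illustrated with a minimal Python sketch. It is not the eye-bot firmware: the function names, the gains, and the normalized thrust command are all hypothetical, chosen only to show how a setpoint from a guidance level might flow down through an autonomous controller to a stability controller.

```python
from dataclasses import dataclass


@dataclass
class Setpoint:
    """Hypothetical command handed from the guidance level downwards."""
    altitude_m: float
    heading_deg: float


def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def guidance_level(target_altitude_m, target_heading_deg):
    """High level (assumed): decide where the robot should be."""
    return Setpoint(target_altitude_m, target_heading_deg)


def autonomous_level(setpoint, measured_altitude_m, kp=0.8):
    """Middle level (assumed): turn altitude error into a climb-rate command.

    The output is normalized to [-1, 1]; kp is an illustrative gain.
    """
    error_m = setpoint.altitude_m - measured_altitude_m
    return clamp(kp * error_m, -1.0, 1.0)


def stability_level(climb_rate_cmd, hover_thrust=0.5, gain=0.2):
    """Low level (assumed): map the climb-rate command to a thrust in [0, 1]."""
    return clamp(hover_thrust + gain * climb_rate_cmd, 0.0, 1.0)


if __name__ == "__main__":
    setpoint = guidance_level(target_altitude_m=1.5, target_heading_deg=90.0)
    climb = autonomous_level(setpoint, measured_altitude_m=1.0)
    print("thrust command:", stability_level(climb))
```

Each level only sees the output of the level above it, which mirrors the separation between the guidance board and the combined stability and autonomy board, although the real interfaces between them are not described here.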

Onboard Sensing:

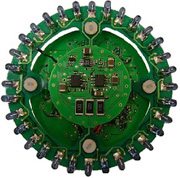

Several onboard sensors help the eye-bot navigate through an indoor environment and coordinate with other eye-bots (figure 5), including:

- Sonar and pressure sensing for altitude control

- Optical-flow for drift detection

- Magnetometer for heading determination

- 360° infrared distance scanner for collision detection and navigation

- Relative positioning sensor for swarm co-ordination and communication

- Custom 360° pan-tilt 3MP camera system

Figure 5: Left - 360° pan-tilt 3MP camera, Middle - 360° infrared distance scanner, Right - relative positioning sensor
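As an illustration of how sonar and pressure sensing might be combined for altitude control, the Python sketch below uses the sonar reading while it is in range and falls back to the pressure-derived altitude otherwise. The fusion scheme, the class name `AltitudeEstimator`, the 3 m sonar range, and the smoothing factor are assumptions made for this example; the page does not state how the eye-bot actually fuses the two sensors.

```python
class AltitudeEstimator:
    """Hypothetical sonar/barometer altitude estimator (illustrative only)."""

    def __init__(self, sonar_max_range_m=3.0, alpha=0.9):
        self.sonar_max_range_m = sonar_max_range_m  # assumed usable sonar range
        self.alpha = alpha            # smoothing factor for the offset estimate
        self.baro_offset_m = 0.0      # learned difference: barometer minus sonar
        self.altitude_m = 0.0

    def update(self, sonar_m, baro_m):
        """Return the altitude estimate for one pair of readings.

        sonar_m may be None when no echo is received.
        """
        sonar_valid = (
            sonar_m is not None and 0.0 < sonar_m <= self.sonar_max_range_m
        )
        if sonar_valid:
            # Trust the sonar and use it to track the barometer's offset.
            measured_offset = baro_m - sonar_m
            self.baro_offset_m = (
                self.alpha * self.baro_offset_m
                + (1.0 - self.alpha) * measured_offset
            )
            self.altitude_m = sonar_m
        else:
            # Out of sonar range: use the offset-corrected barometer.
            self.altitude_m = baro_m - self.baro_offset_m
        return self.altitude_m


if __name__ == "__main__":
    estimator = AltitudeEstimator()
    for sonar, baro in [(1.0, 1.4), (1.2, 1.6), (None, 4.0)]:
        print(estimator.update(sonar, baro))
```

The design choice here is that sonar gives an absolute distance to the floor at short range, while a pressure sensor keeps working at any height but drifts, so the sketch uses the former to correct the latter.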

Current Autonomous Capabilities:

The eye-bot has shown that it is capable of:

- Stable flight with automatic leveling

- Automatic take-off, altitude control and automatic landing

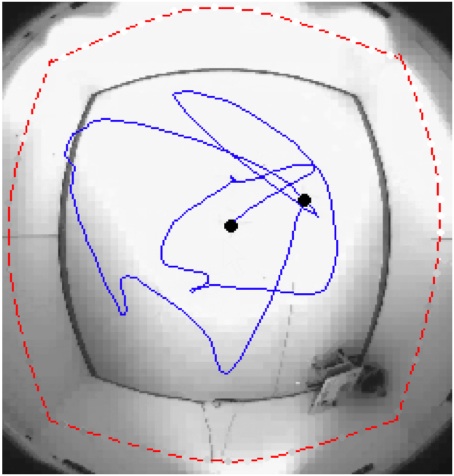

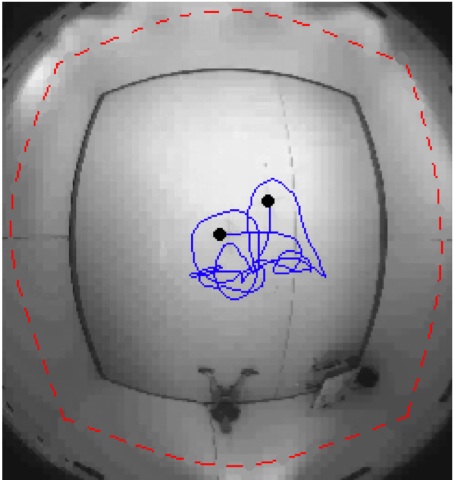

- Autonomous collision avoidance and anti-drift control (figure 6)

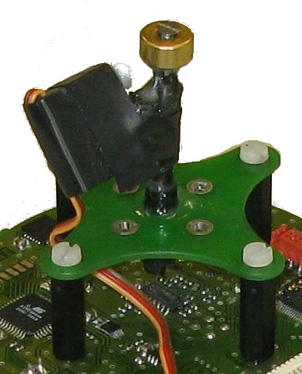

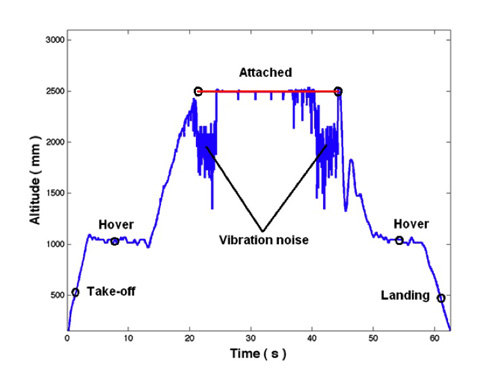

- Autonomous ceiling attachment & detachment (figure 7)

See publications section for more details.

Figure 6: Left - plotted eye-bot trajectory during a collision avoidance experiment, Right - plotted eye-bot trajectory during an anti-drift experiment (Note: images taken with a fish-eye lens; red dotted lines represent the square room walls at 1 m altitude)

Figure 7: Left - vertical rod based ferro-magnetic ceiling attachment/detachment device, Right - logged altitude response during an autonomous ceiling attachment and detachment
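To make the collision avoidance capability more concrete, the Python sketch below shows one common way a 360° distance scan can be turned into an avoidance command: every beam that reports an obstacle closer than a safety distance contributes a push in the opposite direction. This is a generic repulsive-vector scheme, not necessarily the method used on the eye-bot, and the function name, the evenly spaced beam layout, and the 1 m safety distance are assumptions.

```python
import math


def avoidance_vector(ranges_m, safe_distance_m=1.0):
    """Compute a hypothetical avoidance velocity from a 360° range scan.

    ranges_m holds one distance per beam, with beam i pointing at angle
    2*pi*i/len(ranges_m) in the robot frame. Returns (vx, vy), a vector
    pointing away from nearby obstacles, or (0.0, 0.0) if all beams are clear.
    """
    beam_count = len(ranges_m)
    vx = 0.0
    vy = 0.0
    for index, distance_m in enumerate(ranges_m):
        if distance_m <= 0.0 or distance_m >= safe_distance_m:
            continue  # invalid reading, or obstacle far enough away
        angle = 2.0 * math.pi * index / beam_count
        # Closer obstacles push harder; weight grows from 0 to 1.
        weight = (safe_distance_m - distance_m) / safe_distance_m
        vx -= weight * math.cos(angle)
        vy -= weight * math.sin(angle)
    return vx, vy


if __name__ == "__main__":
    # Four beams: front, left, back, right. An obstacle 0.5 m ahead.
    print(avoidance_vector([0.5, 2.0, 2.0, 2.0]))
```

In the four-beam example, only the forward beam is inside the safety distance, so the resulting vector points backwards, away from the obstacle.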

Simulation:

Coming soon...

Publications:


Previous student projects:

- Peter Oberhauser - Advanced Omni-directional Distance Scanner for the Eye-bot (Master Project completed January 2009)

- Kasper Leuenberger & Philippe Bérard - Micro-Quadrotor Mach2 (Semester Project completed January 2009)

- Nicolas Wicht - Micro-GPS for quadrotor waypoint navigation (Semester Project completed January 2009)

- Simon Fivat & Lucas Oehen - Micro-Quadrotor (Semester Project completed June 2008)

- Peter Oberhauser - Omni-directional Distance Scanner for the Eye-bot (Semester Project completed January 2008)

- Adam Klaptocz - Omni-Directional Vision for Hovering MAV (Semester Project completed June 2007)

Video Demonstrations:

Information about the videos can be found on YouTube; simply double-click a video to open it there.

Eye-bot V2 - Distance scanner demo:

Eye-bot V2 - Ceiling attachment demo:

Eye-bot V2 - Stairwell demo:

Eye-bot V1 - On-board Camera demo:

Eye-bot V1 - Stability demo:

Eye-bot - coaxial helicopter demo: